EcoCAR Mobility Challenge

Introduction

EcoCAR Mobility Challenge is the newest U.S. Department of Energy (DOE) Advanced Vehicle Technology Competition (AVTC) series, challenging 12 North American university teams. It is sponsored by DOE, General Motors, and MathWorks and managed by Argonne National Laboratory. Teams have four years to redesign a 2019 Chevrolet Blazer by applying advanced propulsion systems, electrification, SAE Level 2 automation, and vehicle connectivity. Teams will use a variety of sensors and wireless communication devices to design a perception system for the vehicle and deploy Vehicle-to-Everything (V2X) communication to improve overall operating efficiency in the connected urban environment of the future.

The Society of Automotive Engineers (SAE) provides a taxonomy of six levels of driving automation, ranging from no driving automation (Level 0) to full driving automation (Level 5). SAE Level 2 automation refers to a vehicle with combined automated functions, such as acceleration and steering, where the driver must remain engaged with the driving task and monitor the environment at all times.

The SAE Levels of Automation (Source: [NHTSA](https://www.nhtsa.gov/technology-innovation/automated-vehicles-safety))
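
The taxonomy described above can be written down as a small data structure. The following is a minimal sketch, not part of the competition material: the names `SAELevel` and `driver_must_monitor` are hypothetical, and the rule that the driver monitors the environment at Levels 0 through 2 is taken from the SAE taxonomy as summarized by NHTSA.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE driving automation levels, from 0 (none) to 5 (full)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2      # the level targeted in this competition
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_monitor(level: SAELevel) -> bool:
    """Return True when the human driver must monitor the environment at all times.

    Assumption: this holds for Levels 0 through 2 of the SAE taxonomy.
    """
    return level <= SAELevel.PARTIAL_AUTOMATION


if __name__ == "__main__":
    for level in SAELevel:
        print(level.value, level.name, driver_must_monitor(level))
```

Using an `IntEnum` keeps the levels ordered, so a comparison such as `level <= SAELevel.PARTIAL_AUTOMATION` reads the same way the taxonomy is described in prose.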

CAVs Team

In the Connected and Automated Vehicle Technologies (CAVs) swimlane, teams will implement connected and automated vehicle technology to improve the stock vehicle’s energy efficiency and utility for a Mobility as a Service (MaaS) carsharing application.

In particular, the CAVs team is tasked with designing and implementing the perception and control systems needed for an SAE Level 2 autonomous vehicle. To design the perception system, teams can integrate different sensors, such as cameras, radars, and LiDARs, into their vehicles. The CAVs team is also tasked with designing and implementing a V2X system capable of communicating with infrastructure, such as smart traffic lights, and with other V2X-equipped vehicles.

Perception System Design

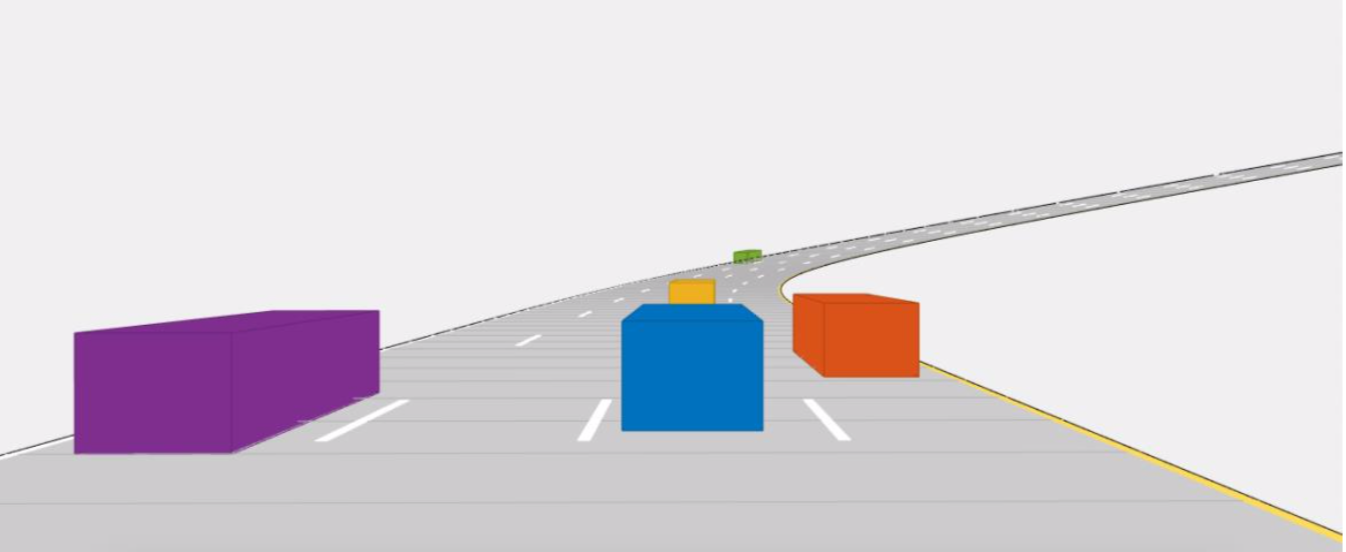

Designing a perception system for the vehicle requires extensive simulation to determine where each sensor should be placed on the vehicle. MATLAB's Automated Driving System Toolbox provides algorithms and tools for designing and testing ADAS and autonomous driving systems, including tools for sensor placement and Field of View (FOV) simulation. The following figure shows a simple cuboid simulation in this toolbox using the Driving Scenario Designer app.

Sample Simulation from MATLAB's Driving Scenario Designer App
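
To illustrate the kind of geometric question an FOV simulation answers, the sketch below checks whether a target point falls inside a sensor's horizontal field of view. It is a simplified 2D stand-in written in Python, not the toolbox's own API. The `Sensor` class, the `in_field_of_view` function, and all mounting positions, angles, and ranges are hypothetical values chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Sensor:
    x: float          # mounting position along the vehicle's forward axis (m)
    y: float          # mounting position along the vehicle's left axis (m)
    yaw_deg: float    # boresight direction, degrees counter-clockwise from forward
    fov_deg: float    # total horizontal field of view (degrees)
    max_range: float  # maximum detection range (m)


def in_field_of_view(sensor: Sensor, px: float, py: float) -> bool:
    """Return True if the point (px, py), in vehicle coordinates, is covered."""
    dx, dy = px - sensor.x, py - sensor.y
    distance = math.hypot(dx, dy)
    if distance > sensor.max_range:
        return False
    if distance == 0.0:
        return True  # target coincides with the sensor origin
    bearing = math.degrees(math.atan2(dy, dx))
    # Wrap the angular offset from boresight into [-180, 180).
    offset = (bearing - sensor.yaw_deg + 180.0) % 360.0 - 180.0
    return abs(offset) <= sensor.fov_deg / 2.0


if __name__ == "__main__":
    # Hypothetical sensors: a narrow long-range radar and a wide front camera.
    radar = Sensor(x=3.7, y=0.0, yaw_deg=0.0, fov_deg=20.0, max_range=150.0)
    camera = Sensor(x=1.9, y=0.0, yaw_deg=0.0, fov_deg=90.0, max_range=80.0)
    target = (50.0, 20.0)  # 50 m ahead, 20 m to the left
    print("radar sees target:", in_field_of_view(radar, *target))
    print("camera sees target:", in_field_of_view(camera, *target))
```

Sweeping such a check over a grid of target points for each candidate mounting position gives a rough coverage map, which is the kind of comparison the simulation is used for before sensors are mounted on a vehicle.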

After the sensor placements are designed and simulated, the sensors are mounted on a mule vehicle to collect real-world data and verify the simulation results. The following video shows the data collected from a LiDAR, a front camera, and a front radar mounted on a Chevrolet Camaro while driving on a highway. It also displays the outputs of the diagnostic tools designed to monitor the sensor data for possible sensor failures or other issues while the data is being recorded.
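
The diagnostic tools themselves are not described here. As one example of the kind of check such a tool might perform, the sketch below flags gaps in a sensor's message timestamps that could indicate a dropout. The function name `find_dropouts`, the tolerance factor, and the sample timestamps are all hypothetical.

```python
from typing import List, Tuple


def find_dropouts(timestamps: List[float], expected_period: float,
                  tolerance: float = 1.5) -> List[Tuple[float, float]]:
    """Return (previous, current) timestamp pairs that are too far apart.

    A gap is reported when the time between consecutive messages exceeds
    expected_period * tolerance. The tolerance factor is an assumption.
    """
    limit = expected_period * tolerance
    gaps = []
    for prev, curr in zip(timestamps, timestamps[1:]):
        if curr - prev > limit:
            gaps.append((prev, curr))
    return gaps


if __name__ == "__main__":
    # Hypothetical 10 Hz sensor with messages missing between 0.2 s and 0.7 s.
    stamps = [0.0, 0.1, 0.2, 0.7, 0.8]
    for start, end in find_dropouts(stamps, expected_period=0.1):
        print(f"possible dropout between {start:.1f} s and {end:.1f} s")
```

A check like this can run per sensor while recording, so that a failed or disconnected device is noticed during the drive rather than when the data is reviewed afterwards.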

References

- EcoCAR Mobility Challenge Website

- AVTC on Flickr

- National Highway Traffic Safety Administration, Automated Vehicles for Safety

- The Society of Automotive Engineers, Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles